Abstraction and Reasoning Corpus


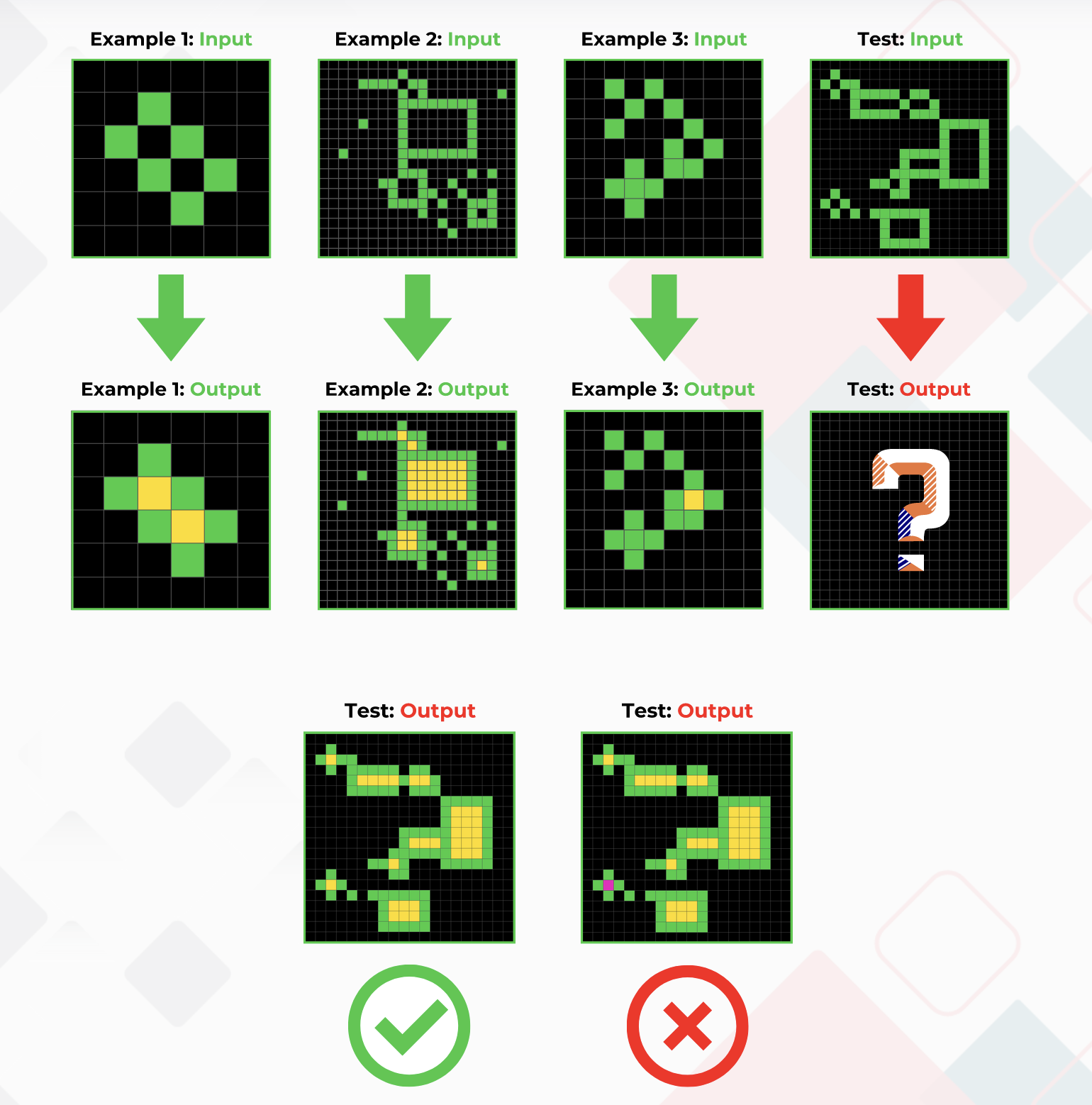

- The Abstraction and Reasoning Corpus (ARC) is a unique benchmark designed to measure AI skill acquisition and track progress towards achieving human-level AI.

- Introduced by François Chollet in 2019 in the paper: On the Measure of Intelligence [pdf]

- It is an IQ test for AI: humans can effortlessly solve an average of 80% of all ARC tasks, current algorithms can only manage up to 31%.

Figure 1: Abstraction and Reasoning Corpus

- Summary of some approaches: https://lewish.io/posts/arc-agi-2025-research-review

1. Core Knowledge Priors

Any test of intelligence is going to involve prior knowledge. ARC seeks to control for its own assumptions by explicitly listing the priors it assumes, and by avoiding reliance on any information that isn’t part of these priors (e.g. acquired knowledge such as language). The ARC priors are designed to be as close as possible to Core Knowledge priors.

Core Knowledge Priors assumed by ARC are:

- Objectness priors:

  - Object cohesion

  - Object persistence

  - Object influence via contact

- Goal-directedness prior

- Numbers and counting priors

- Basic geometry and topology priors:

  - Regular, basic shapes are more likely than complex shapes

  - Symmetries

  - Shape scaling

  - Ideas of containing, being contained, being inside/outside

  - Copying, repeating objects

- CompressARC (ARC-AGI without Pretraining) does not encode any of these core knowledge priors.

- Work on intuitive physics understanding learned from videos also challenges the view that such priors must be built in.
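The priors listed above are stated informally. As a rough illustration (not part of ARC itself, and not the method of any particular solver), a few of them can be written as operations on an ARC-style grid, assuming the usual representation of a grid as a 2D array of integers 0-9, one integer per color. The helper names `find_objects`, `is_mirror_symmetric`, and `upscale` are hypothetical, and treating an "object" as a 4-connected region of a single non-background color is only one possible reading of object cohesion.

```python
from collections import deque

# Assumption: an ARC grid is a list of rows, each cell an integer color 0-9.
Grid = list[list[int]]


def find_objects(grid: Grid, background: int = 0) -> list[tuple[int, set[tuple[int, int]]]]:
    """Object cohesion (one reading): same-colored cells touching edge-to-edge form one object."""
    rows, cols = len(grid), len(grid[0])
    seen: set[tuple[int, int]] = set()
    objects = []
    for r in range(rows):
        for c in range(cols):
            color = grid[r][c]
            if color == background or (r, c) in seen:
                continue
            # Breadth-first flood fill from (r, c) over 4-connected cells of the same color.
            cells: set[tuple[int, int]] = set()
            queue = deque([(r, c)])
            seen.add((r, c))
            while queue:
                y, x = queue.popleft()
                cells.add((y, x))
                for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    ny, nx = y + dy, x + dx
                    if (0 <= ny < rows and 0 <= nx < cols
                            and (ny, nx) not in seen and grid[ny][nx] == color):
                        seen.add((ny, nx))
                        queue.append((ny, nx))
            objects.append((color, cells))
    return objects


def is_mirror_symmetric(grid: Grid) -> bool:
    """Symmetry: True if the grid equals its left-right mirror image."""
    return all(row == row[::-1] for row in grid)


def upscale(grid: Grid, k: int) -> Grid:
    """Shape scaling: replace every cell with a k x k block of the same color."""
    return [[cell for cell in row for _col in range(k)] for row in grid for _row in range(k)]


grid = [
    [0, 1, 1, 0],
    [0, 1, 0, 0],
    [2, 0, 0, 2],
]
print(len(find_objects(grid)))    # 3: one object of color 1, two separate cells of color 2
print(is_mirror_symmetric(grid))  # False: row 1 is not its own mirror image
print(upscale([[1, 2]], 2))       # [[1, 1, 2, 2], [1, 1, 2, 2]]
```

ARC objects are not always single-colored or 4-connected (some tasks are easier to read with diagonal connectivity or multi-colored objects), so a hand-written solver would plausibly try several object definitions rather than commit to this one.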

2. CompressARC: ARC-AGI without Pretraining

- By Isaac Liao and Albert Gu

https://iliao2345.github.io/blog_posts/arc_agi_without_pretraining/arc_agi_without_pretraining.html